I remember some 20 years ago when a small startup called Google gained my admiration and loyalty by heading their corporate code of conduct with the words, “Don’t be evil.”

Although everyone holds their own idea of what ‘evil’ means, for a corporation I think a fair definition is that human well-being, whether individual or societal, contains more value than any potential profit. By no means is this an unassailable definition, but it’s a good approximate guide for judgment: is a company always putting human well-being before profits? If so, I’m willing to say that they’re not being evil. And Google, for a time, probably fit into my sense of ‘not evil’.

Market pressure, stock prices, company valuations, dividends, investor returns, executive salaries, quarterly earnings reports: many things conspire to lead a company toward potential evil if the management takes its hands off the wheel for even a moment. Too often, they intentionally steer into the abyss.

When Google spawned their parent company, Alphabet, they changed their motto to ‘Do the right thing.’ I knew they’d driven over the cliff, giving in to the baser instincts of corporate hunger. ‘Do the right thing’ is eerily nebulous and easily duplicitous. Do the right thing for whom: the public, the environment and employees, or venture capitalists, stockholders and executives? Maybe ‘the right thing’ is increasing profits by hook or by crook. Maybe the right thing requires a clever justification of evil when the financial upside is too good to ignore.

Google does creepy stuff—tracking our location, recording every search term we enter, what we click on, our phone number, our contacts, who we call and how long we spend watching porn (incognito browsers aren’t very incognito)—but none of this I would label as ‘evil’; unsettling as hell, sure, but not ‘evil’. But a couple of weeks ago Google jumped into the medical A.I. game, and they’re set to release a maelstrom of human misery on a scale never before possible. Calling Google evil no longer feels dubious.

A Brief Primer on A.I.

A couple of decades ago Artificial Intelligence (AI) existed primarily in fiction, but today AI lives in the real world, embedded in thermostats, refrigerators, smartphones, cars and even hearing aids. Prognosticators issue dire warnings and evangelists wax poetic about the rise of AI. As far as the soothsayers go, we have one of two futures ahead of us: The Terminator, where AI is trying to exterminate us, or Wall-E, where AI makes us cripplingly comfortable (I’m not sure which is worse).

At present, however, AI is anything but intelligent. It is probably a century or more away from resembling human intelligence, and even further from reaching a state of consciousness (if we can even recognize it as consciousness when or if it happens, considering that we still don’t know how human consciousness works: the classic mind-body problem).

These scenarios lack credibility because of who they come from. The most bombastic accounts about the future of AI come from people who have never studied the theory behind how current AI systems work and they assuredly have never written an AI algorithm; the more sober, but no less sensational accounts come from corporate spokespeople whose titillating remarks might cause a rise in stock prices.

I, on the other hand, am an experienced realist. I don’t work for any corporate interests benefiting from supposed AI miracles and I’ve been toying with AI since before Google existed (when AI was an entirely different beast) and even recently wrote an AI program based on one of the most advanced algorithms yet published[1]. Aside from that, I’ve designed, coded and implemented multiple versions of the precursor to modern AI, called expert systems.

The current generation of AI is an advanced expert system that requires no expertise from the programmer other than the ability to program. AI software tackles a variety of problems, many of which the programmer or software designer might not understand. An expert system, however, contains the wisdom and insights of an expert in the field the software is designed for. Here’s a simple example of the difference between expert and AI from the software system I’m building.

Meal planning is a complex problem when trying to balance a person’s schedule with their health or performance goals. I can’t simply tell a person when they should eat; their schedule might not allow it. By far the most difficult task with any client is arranging their meals appropriately throughout the day.

The expert system I created for this task contains a set of fuzzy rules (fuzzy in the sense of fuzzy logic) that need to be satisfied simultaneously, as well as possible. The system requires about two dozen rules. What’s even more mind-numbing is that the rules aren’t static: as meals shift around to different times of the day, the rules also change to fit the new configuration. To find optimal meal plans, I employ what’s called a multi-objective evolutionary algorithm. It sounds fancy, but it’s just a powerful way to find the best set of answers. There’s a set of answers because no one configuration is the absolute best.
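To make the idea of a set of best answers concrete, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the two rules (`rule_even_spacing`, `rule_late_dinner`), their numbers, and the candidate meal times are hypothetical and are not the actual rules of the system described above. It also swaps the evolutionary search for a brute-force check of every candidate, which only works because the toy problem is tiny. What it does show is why more than one plan survives: when rules pull in different directions, several plans can each be unbeaten (a Pareto front).

```python
from itertools import product


def triangular(x, low, peak, high):
    """Fuzzy membership: 0 at or beyond low/high, 1 at peak, linear in between."""
    if x <= low or x >= high:
        return 0.0
    if x <= peak:
        return (x - low) / (peak - low)
    return (high - x) / (high - peak)


# Hypothetical fuzzy rules: each scores a plan (meal times in hours) from 0 to 1.
def rule_even_spacing(plan):
    # Prefer gaps of about 5 hours between meals; the worst gap sets the score.
    gaps = [later - earlier for earlier, later in zip(plan, plan[1:])]
    return min(triangular(gap, 2, 5, 8) for gap in gaps)


def rule_late_dinner(plan):
    # Assumed client who works late and prefers dinner near 20:00.
    return triangular(plan[-1], 16, 20, 23)


def dominates(a, b):
    """True if score vector a is at least as good as b everywhere and better somewhere."""
    return all(x >= y for x, y in zip(a, b)) and any(x > y for x, y in zip(a, b))


def pareto_front(plans, rules):
    """Keep every plan whose scores are not dominated by another plan's scores."""
    scored = [(plan, tuple(rule(plan) for rule in rules)) for plan in plans]
    return [(plan, s) for plan, s in scored
            if not any(dominates(other, s) for _, other in scored)]


# Candidate plans: breakfast 6-9, lunch 11-14, dinner 17-20 (whole hours only).
plans = list(product(range(6, 10), range(11, 15), range(17, 21)))
rules = [rule_even_spacing, rule_late_dinner]
front = pareto_front(plans, rules)

for plan, scores in sorted(front):
    print(plan, [round(s, 2) for s in scores])
```

Two kinds of plan survive in this toy case: evenly spaced meals with a slightly early dinner, and dinner at the preferred hour with less even spacing. Neither beats the other on both rules, so both stay on the list.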

The key to this expert system is that it requires an expert to define the rules. Without the fuzzy rules, the system fails to find an answer because it lacks any way to make sense of the question. The role of the expert—in this case, me—is indispensable. My knowledge is baked into the code. Developing a good expert system requires a deep understanding of the problem at hand.

AI does away with the need for the expert. AI can figure out the general rules to use to determine how to optimize the problem at hand, like meal planning. Once the AI discovers the rules, these rules can be applied to each new scenario. Designing the rules is the hardest part of implementing an expert system. AI eliminates the need for an expert who understands the problem. But there’s a caveat…

Before I go on about the shortcomings of AI, I need to talk about modern medicine, how it lost its way and the advent of healthcare.

Not Your Grandmother’s Medicine

One should never choose a creed carelessly. When faced with indecision or chaos, a strong creed provides guidance and stability, helping to right our moral compass if ever tempted astray.

Abandoning a good creed, like ‘don’t be evil’, allows the worst aspects of human nature to seep into the edifice and loosen the foundation. Like Google, the medical profession discarded one of the oldest and noblest of creeds: primum non nocere, or ‘above all, do no harm’. As a result, medicine transmogrified into a parody of what it once was, dubbing itself with the innocuous name ‘healthcare’.

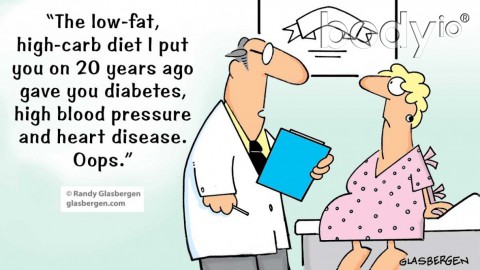

Medicine, prior to the 1970s when ‘do no harm’ reigned, performed the task of healing, providing care to those in need. Medicine treated the sick. Today, the rules differ. No longer does medicine provide treatment for disease. Its successor, healthcare, has a new creed: in health, find sickness; in sickness, find profit. In simpler terms, the authors of “Overdiagnosed: Making People Sick in the Pursuit of Health” describe healthcare as “the drive to turn people into patients”[2].

The pharmaceutical and medical device industries, international organizations and governments orchestrated the shift to a disease profiteering paradigm and I can’t cover the myriad ways in which they’ve accomplished these ends in the limited space here. I go into detail in my forthcoming book, although not as much as in Overdiagnosed and The Tyranny of Health. To discover more, start there. But for now, we can focus on the diagnosis of cancer as one example, as this is where Google appears to be headed.

Phantoms in the Flesh

When I was 21 years old, my doctor diagnosed me with thyroid cancer. Having left the womb with deep inborn skepticism, I don’t believe extraordinary claims without extraordinary evidence. The evidence, in this case, didn’t convince me. My HDL cholesterol levels were higher than my LDL cholesterol levels, which is abnormal. Normally a sign of health, this abnormal state prompted my doctor to look for an ominous explanation. I couldn’t be the healthiest patient he’d ever seen.

After more negative test results (in other words, after confirming I was indeed his healthiest patient), he started feeling around for abnormalities on my liver, around my pancreas and finally on my throat. And there it was: a nodule on my thyroid gland. He told me it was likely cancer and scheduled a biopsy for the next day.

I didn’t go. Over twenty years later, I’m still cancer free with a perfectly functioning thyroid. The nodule regressed on its own.

My doctor treated me under the newly emerged ‘in health, find sickness’ paradigm. And it was profitable. The testing alone made the hospital hundreds of dollars. Had I continued with the tests, more money would have followed. I have no doubt that the test would have revealed abnormal cells and might have led to surgery and a lifelong dependence on medication.

How can I be so sure that the tests would come back positive and, stranger still, why didn’t I care? Thyroid cancer is one of the fastest growing cancers in America[3], but the number of deaths from thyroid cancer remains constant despite this explosion[2]. Malignant thyroid cancer is not increasing, but the ability to find abnormalities is. In Finland, researchers discovered that everyone, if tested to the best of our abilities for thyroid cancer, would show abnormal cell growth that a doctor would immediately label as cancer[4].

Over-diagnosis, such as this, occurs regularly. Modern medical devices peer beyond the skin with amazing resolution that exposes an array of abnormalities. But no one’s normal, especially as you look closer and closer, as lung cancer testing demonstrates.

A new, high resolution technique called spiral CT scans can detect lung abnormalities at early stages: a boon, sure, until it’s misused. Smokers have a risk of death from lung cancer of about 35 people per 10,000; for non-smokers, the rate is 2 people per 10,000. But a spiral CT scan detects ‘malignant’ cancer in 110 people per 10,000 for smokers and non-smokers[2]. More cancer isn’t detected. Spiral CT scans just demonstrate that people aren’t normal if you look close enough[5]. Spiral CT scans are an expensive (and lucrative) test that says less than the cost-free answer to a simple yes or no question: Do you smoke?
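The arithmetic behind that claim is worth spelling out. The short Python sketch below uses only the three figures quoted above and makes two simplifying assumptions that do not come from the cited source: that all rates cover comparable groups and time spans, and that every person who would die of lung cancer is among those the scan flags. Under those assumptions, it estimates what share of flagged people would not have died of the disease.

```python
# Figures quoted in the text, per 10,000 people.
DETECTED_PER_10K = 110
DEATHS_PER_10K = {"smokers": 35, "non-smokers": 2}


def share_not_fatal(detected, deaths):
    """Share of flagged people who would not die of the disease.

    Assumes every death is among the flagged cases (a simplification).
    """
    return (detected - deaths) / detected


for group, deaths in DEATHS_PER_10K.items():
    share = share_not_fatal(DETECTED_PER_10K, deaths)
    print(f"{group}: {DETECTED_PER_10K - deaths} of {DETECTED_PER_10K} flagged "
          f"({share:.0%}) would not die of lung cancer")
```

On these numbers, roughly two of every three flagged smokers, and nearly all flagged non-smokers, would carry a diagnosis for something that would not have killed them.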

False-positives are on the rise: breast cancer, prostate cancer, lung cancer, thyroid cancer and many others (women seem particularly targeted for over-diagnosis). And getting a false-positive causes psychological trauma that lasts years[6-9]—but we have medication for that too. In the case of adrenal incidentaloma, testing for cancer can cause cancer[10].

With the ever increasing resolution used to see inside the human body, doctors find more and more abnormalities, most of which never become cancer. But doctors train to detect these abnormalities and err on the side of over-diagnosis. Each test ordered, however, increases profits, as does each abnormality mislabeled as cancer.

Perfect Storm

AI, as I mentioned above, doesn’t need an expert to design the software. The expertise comes in the form of data, massive amounts of data. Someone creates training data that consists of the raw data and the corresponding set of ‘answers’.

In the case of facial recognition, people sit around and pore over hundreds of thousands of images of people identifying where the human faces are in each image. The data are the unmarked images, the ‘answers’ are the images with the faces indicated. After hours of chugging, the AI develops a pattern of rules to use for identifying faces in pictures. The expertise is not in the software, it’s a direct translation of the expertise of the people who created the answer set.

As a preposterous and laughable thought experiment, imagine that the team tasked with identifying faces in the above example held militant social-justice warrior tendencies and felt that white men have been over-identified in history, and so should become faceless in the digital age. They identify and label all faces except those of white men. The AI will learn the group’s bias and become prejudiced against the faces of white men, failing to identify them.

AI inherits the expertise, but also the biases and consequently the goals of the people who create the data set[11]. This example may seem trite, but the problem is real and has infected the US legal system, where AI implements the same bias against minorities as the humans it learned from.

Imagine training AI from the data created by doctors who themselves are trained to label any abnormality in a medical image as cancer. The AI learns to over-diagnose and errs on the side of too much, just like the doctors. Instead of the days needed by a trained radiologist, however, an AI system can detect abnormalities in seconds. Suddenly, human misery can be mass produced along with the associated costs—both financial and psychological.

This is already happening. The AI, unfortunately, isn’t trained to detect entire outcomes. Instead of being trained on the entire spectrum of information, from medical image, to diagnosis, to biopsy, to results, to treatment and final outcome, the AI is only trained to mimic a doctor’s ability to identify an abnormality as cancer. As a consequence, it’s almost as good at over-diagnosing[12].

If the entire chain of events were used to train the AI, it could conceivably reduce the number of false-positives. But no one, to date, has trained AI in this manner. I imagine that’s because healthcare profits would fall. If the guiding principle is ‘in health, find sickness; in sickness, find profit’, then it doesn’t make sense to decrease diagnosis. It makes more sense to speed up the rate of over-diagnosis. How else could one turn a healthy person into a sick patient with blinding efficiency?
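A toy example shows how much the choice of training target matters. The Python sketch below is entirely synthetic: the nodule sizes, the 3 mm cutoff at which the imaginary doctors call something cancer, and the 12 mm cutoff at which a nodule actually proves harmful are invented numbers, and the one-threshold `fit_threshold` learner is a stand-in for a real AI system, not a description of any product. It trains the same learner twice, once to mimic the doctors’ labels and once on final outcomes, then counts how many harmless cases each version flags.

```python
def fit_threshold(sizes, targets):
    """Pick the size cutoff that best reproduces the targets on the training data."""
    candidates = sorted(set(sizes)) + [max(sizes) + 1]

    def errors(cutoff):
        return sum((size >= cutoff) != target for size, target in zip(sizes, targets))

    return min(candidates, key=errors)


def false_positives(cutoff, sizes, outcomes):
    """Count cases flagged by the cutoff that never turned out harmful."""
    return sum(size >= cutoff and not harmful for size, harmful in zip(sizes, outcomes))


# Synthetic data: one case per nodule size from 1 to 20 mm (invented numbers).
sizes = list(range(1, 21))
doctor_labels = [size >= 3 for size in sizes]  # assumed: doctors flag almost everything
outcomes = [size >= 12 for size in sizes]      # assumed: only large nodules prove harmful

mimic_cutoff = fit_threshold(sizes, doctor_labels)  # trained to copy the doctors
outcome_cutoff = fit_threshold(sizes, outcomes)     # trained on final outcomes

print("mimic model:", mimic_cutoff, "mm,",
      false_positives(mimic_cutoff, sizes, outcomes), "false positives")
print("outcome model:", outcome_cutoff, "mm,",
      false_positives(outcome_cutoff, sizes, outcomes), "false positives")
```

The learner is identical in both runs; only the answers it is shown differ. Copying the doctors reproduces their over-diagnosis, while learning from outcomes does not.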

Google Goes Malignant

When Google announced the acquisition of a UK-based medical AI platform and that it’s “launching hard into the healthcare industry”[13], a shiver raced down my spine. The encroachment of healthcare into our daily lives already frightens, saddens and disgusts me, but this…

Google has a penchant for entering industries and streamlining progress at unfathomable speeds. In an industry focused on turning people into patients into profits, Google is poised to make billions by literally creating disease. Even a false-positive on a cancer test can make someone sick.

I know I’m not more brilliant or perceptive than the combined brain power at the colossus of Silicon Valley. Google knows evil. And they’ve embraced it.

References

1. Stanley KO, Miikkulainen R. Evolving neural networks through augmenting topologies. Evol Comput. 2002 Summer;10(2):99-127.

2. Welch HG, Schwartz LM, Woloshin S. Overdiagnosed: Making People Sick in the Pursuit of Health. Beacon Press; 2011.

3. Thyroid Awareness – Thyroid Cancer (last accessed Nov 29, 2018).

4. Harach HR, Franssila KO, Wasenius V. Occult Papillary Carcinoma of the Thyroid: A ‘Normal’ Finding in Finland. A Systematic Autopsy Study. Cancer. 1985 Aug 1;56(3):531-8.

5. Gleeson FV. Is screening for lung cancer using low dose spiral CT scanning worthwhile? Thorax. 2006 Jan;61(1):5-7.

6. Brodersen J, Siersma VD. Long-term psychosocial consequences of false-positive screening mammography. Ann Fam Med. 2013 Mar-Apr;11(2):106-15.

7. McNaughton-Collins M, Fowler FJ Jr, Caubet JF, Bates DW, Lee JM, Hauser A, Barry MJ. Psychological effects of a suspicious prostate cancer screening test followed by a benign biopsy result. Am J Med. 2004 Nov 15;117(10):719-25.

8. Gustafsson O, Theorell T, Norming U, Perski A, Ohström M, Nyman CR. Psychological reactions in men screened for prostate cancer. Br J Urol. 1995 May;75(5):631-6.

9. Elmore JG, Barton MB, Moceri VM, Polk S, Arena PJ, Fletcher SW. Ten-year risk of false positive screening mammograms and clinical breast examinations. N Engl J Med. 1998 Apr 16;338(16):1089-96.

10. Cawood TJ, Hunt PJ, O’Shea D, Cole D, Soule S. Recommended evaluation of adrenal incidentalomas is costly, has high false-positive rates and confers a risk of fatal cancer that is similar to the risk of the adrenal lesion becoming malignant; time for a rethink? Eur J Endocrinol. 2009 Oct;161(4):513-27.

11. Char DS, Shah NH, Magnus D. Implementing Machine Learning in Health Care – Addressing Ethical Challenges. N Engl J Med. 2018 Mar 15;378(11):981-983.

12. Wang S, Summers RM. Machine learning and radiology. Med Image Anal. 2012 Jul;16(5):933-51. Review.

13. Google Absorbs Streams App to Create An “AI-Powered Assistant for Nurses and Doctors” (last accessed Nov 29, 2018).